What Is HDR for Monitors, and Is It Worth It?

High Dynamic Range (HDR) is an increasingly popular feature when it comes to monitors and TVs. But what exactly is HDR and is it worth the extra cost?

In this article, we’ll take a look at what HDR can do and whether or not it’s worth investing in. HDR promises to provide viewers with greater depth, contrast, and color than regular monitors. It can produce deeper blacks, brighter whites, and more vibrant colors than non-HDR displays.

So if you’re looking for an improved viewing experience, then HDR might be a good option for you. Read on to find out more about HDR and whether it’s worth it!

What Does HDR Do?

High Dynamic Range (HDR) is a technology used to improve the quality of images shown on monitors. It expands the range of brightness and color that both the video signal and the monitor can handle. The result is a more realistic picture, with deeper blacks, brighter highlights, and more vibrant colors.

HDR also preserves detail in dark areas of an image, making it easier for viewers to make out information in those areas. To take advantage of HDR, a monitor must support an HDR format such as HDR10 or Dolby Vision. Both formats are designed to provide an enhanced viewing experience, but they differ in their capabilities.
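Both HDR10 and Dolby Vision describe brightness with the Perceptual Quantizer (PQ) curve, standardized as SMPTE ST 2084, which maps a signal value to an absolute luminance of up to 10,000 nits. The Python sketch below implements that curve and its inverse using the published ST 2084 constants. The function names `pq_to_nits` and `nits_to_pq` are our own, and a real monitor still clips or tone-maps anything above its own peak brightness.

```python
# Sketch of the SMPTE ST 2084 "PQ" transfer function used by HDR10.
# Function names are illustrative; the constants are the ST 2084 values.

M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PQ_MAX_NITS = 10_000.0   # absolute luminance at full signal


def pq_to_nits(signal: float) -> float:
    """Decode a normalized PQ signal (0.0-1.0) to luminance in nits."""
    e = max(0.0, min(1.0, signal)) ** (1 / M2)
    return PQ_MAX_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def nits_to_pq(nits: float) -> float:
    """Encode luminance in nits as a normalized PQ signal (0.0-1.0)."""
    y = (max(0.0, min(PQ_MAX_NITS, nits)) / PQ_MAX_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100, 400, 1000, 10_000):
    print(f"{nits:>6} nits -> PQ signal {nits_to_pq(nits):.3f}")
```

Running it shows that 100 nits, a typical SDR white level, already encodes to about 0.51 and 1,000 nits to about 0.75. PQ deliberately spends much of its signal range on darker tones, where banding would otherwise be most visible.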

For instance, Dolby Vision content can be mastered for a peak brightness of up to 10,000 nits, while HDR10 content is typically mastered at 1,000 to 4,000 nits; how bright the picture actually gets still depends on the display. Dolby Vision also supports up to 12-bit color depth instead of the 10-bit color depth used by HDR10, and its scene-by-scene metadata lets the display adjust the picture more precisely. Whether it is worth investing in an HDR monitor depends on how you plan to use it and your expectations for image quality.

If you are looking for a monitor that will give you a more realistic viewing experience with improved contrast and detail, an HDR monitor can be worth the investment. However, if you just want something basic, you may be better off sticking with a regular non-HDR monitor. With this in mind, let’s look at the two main HDR formats: HDR10 vs. Dolby Vision.

HDR Formats: HDR10 vs. Dolby Vision

Moving from a traditional SDR display to HDR can be confusing, especially when it comes to choosing between the various formats on offer. Understanding HDR and its formats is key to deciding whether it is worth the investment for your monitor setup.

The two main HDR formats, HDR10 and Dolby Vision, both carry color and brightness information across a much wider range than SDR, allowing for vibrancy and detail that SDR displays can’t reproduce.

* **HDR10**:
    * Uses static metadata that applies to an entire video;
    * Uses 10-bit color depth (about 1.07 billion colors);
    * Has no resolution or frame-rate limit of its own; those come from the display and its connection (for example, HDMI 2.0 tops out at 4K and 60 frames per second);
    * Supported by a wide range of devices, including gaming consoles, Blu-ray players, streaming sticks, and more.

* **Dolby Vision**:
    * Uses dynamic metadata that adjusts to each scene or even each frame;
    * Supports 12-bit color depth (about 68.7 billion colors);
    * Likewise, resolution and frame rate are limited by the display and its connection rather than the format;
    * Requires licensed Dolby Vision support in the display or playback device;
    * Supported by fewer devices, mainly select TVs and streaming boxes; few PC monitors support it.
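The color counts in the list above come from simple arithmetic: each of the red, green, and blue channels gets 2^bits levels, and the total is that number cubed. A quick Python check (the helper name is just for illustration):

```python
# Count the colors an RGB display can address at a given bit depth.
# The helper name is illustrative.

def colors_for_bit_depth(bits_per_channel: int) -> int:
    """Each channel has 2**bits levels; total colors is levels cubed."""
    levels = 2 ** bits_per_channel
    return levels ** 3


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} levels per channel, "
          f"{colors_for_bit_depth(bits):,} colors")
```

This prints 16,777,216 colors for 8-bit, 1,073,741,824 for 10-bit, and 68,719,476,736 for 12-bit. The extra levels matter for HDR because the same number of steps has to cover a much wider brightness range, and too few steps show up as visible banding in gradients.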

In practice, the choice between HDR10 and Dolby Vision depends mostly on what your hardware and content support, since virtually every HDR display handles HDR10 while Dolby Vision support is less common, especially on PC monitors. Whether either format is worth it depends on your image-quality expectations, budget, and existing equipment.

The next step is to look at the display standards that help you take full advantage of these HDR formats.

DisplayHDR Standards by VESA

High Dynamic Range (HDR) is an advanced display technology that can significantly improve the visual experience on a monitor. The Video Electronics Standards Association (VESA) has developed several DisplayHDR standards, which are designed to help manufacturers and consumers understand what they can expect from HDR-enabled displays.

DisplayHDR 1000 is one of VESA’s top tiers (among the standard tiers, only DisplayHDR 1400 sits above it) and requires a peak brightness of 1,000 nits and wide color gamut capabilities. This makes it well suited to HDR games and movies, with vibrant colors, high contrast, and deep black levels.

DisplayHDR 400 is the entry-level tier, with DisplayHDR 500 and 600 sitting between it and DisplayHDR 1000; it requires a peak brightness of 400 nits and 8-bit color. That doesn’t mean DisplayHDR 400 can’t deliver good visuals; it just means the HDR effect won’t be as impressive as on a DisplayHDR 1000 monitor. Displays at this level are better suited to general use like web browsing or watching SDR content.
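As a rough illustration of how the tiers stack up by brightness, the Python sketch below picks the highest standard DisplayHDR tier whose minimum peak brightness a measured value meets. The `brightness_tier` helper is hypothetical and only checks peak brightness: VESA’s actual certification also tests black level, color gamut, bit depth, and other criteria, and the separate DisplayHDR True Black tiers are left out.

```python
from typing import Optional

# Hypothetical helper: brightness is only ONE of many DisplayHDR criteria,
# so meeting a tier's peak brightness does not mean a monitor qualifies.
DISPLAYHDR_MIN_PEAK_NITS = {
    "DisplayHDR 400": 400,
    "DisplayHDR 500": 500,
    "DisplayHDR 600": 600,
    "DisplayHDR 1000": 1000,
    "DisplayHDR 1400": 1400,
}


def brightness_tier(peak_nits: float) -> Optional[str]:
    """Return the highest tier whose peak-brightness minimum is met, or None."""
    met = [(nits, name) for name, nits in DISPLAYHDR_MIN_PEAK_NITS.items()
           if peak_nits >= nits]
    return max(met)[1] if met else None


print(brightness_tier(350))   # None
print(brightness_tier(650))   # DisplayHDR 600
print(brightness_tier(1100))  # DisplayHDR 1000
```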

Whether HDR is worth it depends on what you plan to do with your monitor. If you want to watch HDR content or play games with enhanced visuals, a higher-tier monitor such as a DisplayHDR 1000 model is likely to be worth the investment. However, if you only need a monitor for everyday tasks like web browsing or streaming SDR video, a DisplayHDR 400 model may be more suitable and cost-effective.

Moving on to local dimming…

Local Dimming

Local dimming is a technology that improves image quality and contrast on LCD monitors.

It has several benefits, such as better contrast and deeper blacks, as well as improved energy efficiency.

The technology works by dimming the backlight behind darker sections of the image, allowing for deeper blacks and higher contrast.

It’s well worth having for anyone who wants the best image quality from an LCD monitor.

Local Dimming Benefits

Local dimming is a feature used in LED-backlit LCD televisions and computer monitors to improve the contrast ratio. It works by dimming or switching off the sections of the backlight behind dark parts of the image while keeping other sections bright. This helps create a more natural-looking image with deeper blacks and brighter whites. OLED displays don’t use it, since each OLED pixel emits its own light and can switch off completely.

The main benefit of local dimming is a much higher contrast ratio than displays without local dimming can achieve, which is especially noticeable when watching dark scenes.
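Contrast ratio is simply the brightest white divided by the darkest black a display can show at the same time. The Python arithmetic below uses assumed example numbers (a 400-nit backlight and a 1000:1 LCD panel, not measurements of any real monitor) to show why dimming the zones behind dark areas raises that ratio so much.

```python
# Illustrative contrast-ratio arithmetic. All numbers are assumed examples,
# not measurements of any particular monitor.

def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Contrast ratio = brightest white / darkest black."""
    return white_nits / black_nits


backlight_nits = 400.0    # assumed full-backlight white level
native_contrast = 1000.0  # assumed LCD panel contrast (1000:1)

# Without local dimming, "black" is the full backlight leaking through the panel.
black_uniform = backlight_nits / native_contrast

# With local dimming, the zone behind a dark area drops to 5% of full (assumed).
black_dimmed = backlight_nits * 0.05 / native_contrast

print(f"uniform backlight: {contrast_ratio(backlight_nits, black_uniform):,.0f}:1")
print(f"local dimming:     {contrast_ratio(backlight_nits, black_dimmed):,.0f}:1")
```

With a uniform backlight the result is 1,000:1; dimming a dark zone to 5% gives 20,000:1 between that zone and a fully lit one. Within a single zone the panel’s native contrast still applies, which is why bright objects on dark backgrounds can show a halo (blooming).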

There are two major types of local dimming: full array and edge-lit. Full array local dimming utilizes an array of LEDs directly behind the panel to control brightness in different areas; this type typically provides better overall picture quality but is more expensive than edge-lit models. Edge-lit models have LEDs placed around the edges of the display, providing less precise control over brightness levels but at a lower cost.
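Here is a minimal Python sketch of the idea behind full-array local dimming, assuming an idealized panel and a grayscale frame of target brightness values from 0.0 to 1.0. Each zone’s backlight is set to the brightest pixel it has to show, and the LCD layer then opens each pixel just enough to reach its target. The function names are hypothetical, and real dimming controllers also smooth transitions between zones to limit blooming and flicker, which this sketch skips.

```python
from typing import List

Frame = List[List[float]]


def zone_backlight(frame: Frame, zone_rows: int, zone_cols: int) -> Frame:
    """Set each zone's backlight to the brightest pixel it must show."""
    h, w = len(frame), len(frame[0])
    zh, zw = h // zone_rows, w // zone_cols  # assumes the grid divides evenly
    levels = []
    for zr in range(zone_rows):
        row = []
        for zc in range(zone_cols):
            block = [frame[y][x]
                     for y in range(zr * zh, (zr + 1) * zh)
                     for x in range(zc * zw, (zc + 1) * zw)]
            row.append(max(block))
        levels.append(row)
    return levels


def lcd_transmittance(frame: Frame, levels: Frame) -> Frame:
    """Open each LCD pixel so that backlight * transmittance = target."""
    zh = len(frame) // len(levels)
    zw = len(frame[0]) // len(levels[0])
    out = []
    for y, row in enumerate(frame):
        out_row = []
        for x, target in enumerate(row):
            backlight = levels[y // zh][x // zw]
            # A zone that is fully off needs no light from the LCD layer.
            out_row.append(target / backlight if backlight > 0 else 0.0)
        out.append(out_row)
    return out


# 4x4 frame: a bright highlight top-left, a faint spot bottom-right.
frame = [
    [1.0, 0.8, 0.0, 0.0],
    [0.6, 0.9, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.05],
]
levels = zone_backlight(frame, zone_rows=2, zone_cols=2)
print(levels)  # [[1.0, 0.0], [0.0, 0.05]]
print(lcd_transmittance(frame, levels))
```

Zones with nothing to show get a backlight level of 0.0, which is where the deeper blacks come from. An edge-lit design has far fewer, larger zones, so a single highlight forces a much bigger area of the backlight to stay on.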

Either type can improve picture quality in movies and video games, though full-array designs usually make the bigger difference. Keep in mind that some displays don’t support local dimming at all, or offer only a limited implementation because of hardware constraints, so research any potential purchase thoroughly before making your final decision.


HDR Gaming

Having covered local dimming, it’s time to look at HDR for gaming and whether it’s worth it there.

High Dynamic Range (HDR) is a technology that can create very bright whites and deep blacks on your monitor. This means that details in shadows are much clearer and brighter highlights appear more vibrant. It also brings with it wider color gamuts which can make images look more lifelike than with traditional SDR or Standard Dynamic Range displays.

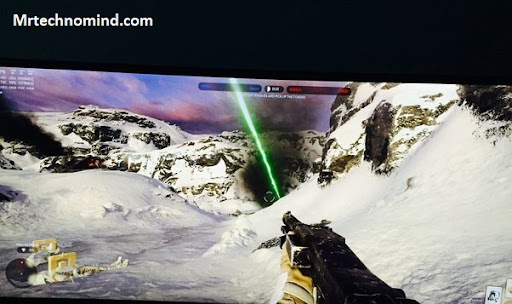

With these improvements in picture quality, many gamers have begun to transition to HDR displays for gaming. HDR gaming has become increasingly popular, as HDR-capable monitors have become cheaper and more widely available.

For those wanting the highest level of image quality, an HDR display may be worth the investment. Some games also let players fine-tune their HDR settings to match their monitor’s capabilities. However, not all games support HDR yet, so if better visuals in games are your main goal, check that the games you play support HDR before buying an HDR display.

In addition to image quality, gamers should consider variable refresh rate technologies such as FreeSync Premium Pro and G-SYNC Ultimate when purchasing an HDR monitor. These technologies offer low latency and variable refresh rates that reduce screen tearing and stuttering in fast-paced games. They also make gameplay smoother overall, which makes them ideal for competitive gamers who need every advantage they can get.

So while an HDR monitor may cost more upfront, its performance benefits could be well worth it in the long run.

FreeSync Premium Pro & G-SYNC Ultimate

HDR, or High Dynamic Range, is a feature available on some monitors that allows for a wider range of contrast and color depth. It enables a broader range of colors to be displayed on the screen, allowing for more vibrant images and videos with greater detail in both dark and bright areas.

| Feature | FreeSync Premium Pro | G-SYNC Ultimate |
| --- | --- | --- |
| Sync technology | Uses VESA Adaptive-Sync to synchronize the graphics card and monitor | Proprietary hardware-based technology that provides precise frame timing |
| HDR support | Supports HDR10 content with a minimum peak brightness of 400 nits, local dimming, and wide color gamut (WCG) | Supports HDR10 content; originally specified a minimum peak brightness of 1,000 nits and local dimming |
| Variable refresh rate (VRR) | Requires a refresh rate of at least 120 Hz and supports Low Framerate Compensation (LFC) | Offers at least 144 Hz; the G-SYNC module repeats frames at low frame rates, serving the same purpose as LFC |
| Performance | Optimized for smoother gameplay with reduced screen tearing and stuttering | Optimized for ultra-high-end gaming with minimal screen tearing and stuttering |
| Certification process | Less stringent than G-SYNC Ultimate | More rigorous than FreeSync Premium Pro |
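Low Framerate Compensation, listed in the table above, works by showing each frame more than once when the game’s frame rate drops below the monitor’s minimum variable refresh rate, so the panel keeps refreshing inside its supported range. The Python sketch below illustrates the idea; `lfc_refresh` and the 48 to 144 Hz example range are assumptions, and real drivers use their own heuristics for when to repeat frames.

```python
import math
from typing import Tuple


def lfc_refresh(fps: float, vrr_min: float, vrr_max: float) -> Tuple[int, float]:
    """Return (times each frame is shown, resulting refresh rate in Hz)."""
    if fps <= 0:
        raise ValueError("frame rate must be positive")
    if fps >= vrr_min:
        # Inside the VRR range no repetition is needed; above it, the
        # display simply runs at its maximum refresh rate.
        return 1, min(fps, vrr_max)
    # Below the range: show each frame enough times to climb back into it.
    repeats = math.ceil(vrr_min / fps)
    refresh = fps * repeats
    if refresh > vrr_max:
        raise ValueError("VRR range too narrow to compensate this frame rate")
    return repeats, refresh


# Assumed example monitor with a 48-144 Hz VRR range.
for fps in (100, 40, 30, 20):
    repeats, hz = lfc_refresh(fps, vrr_min=48, vrr_max=144)
    print(f"{fps} fps -> each frame shown {repeats}x at {hz} Hz")
```

For example, 30 fps is shown twice per frame at 60 Hz and 20 fps three times at 60 Hz. Because the repeated rate always stays below twice the minimum refresh rate, a range whose maximum is at least double its minimum never hits the error branch in this sketch, which is why LFC depends on a wide VRR range.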

FreeSync Premium Pro and G-SYNC Ultimate are two of the most popular technologies used in conjunction with HDR, allowing for smooth visuals without tearing or lag.

The main benefit of FreeSync Premium Pro is low-latency variable refresh at 120Hz or higher, providing a smoother gaming experience than conventional fixed-refresh displays. Its HDR features are built around AMD graphics cards, although NVIDIA cards can still use the monitor’s Adaptive-Sync support through NVIDIA’s G-SYNC Compatible mode.

G-SYNC Ultimate is also an excellent option for gamers who want the best performance from their monitor. It targets refresh rates of 144Hz or higher with minimal input lag, along with HDR support, and its variable refresh features are designed primarily for NVIDIA graphics cards. The G-SYNC module also supports variable overdrive, which adjusts pixel response to the current refresh rate to reduce motion blur and ghosting in fast-paced games. In terms of features, G-SYNC Ultimate offers gamers:

- Refresh Rates of 144 Hz or Higher

- Minimal Input Lag

- Variable Overdrive

- Designed Primarily for NVIDIA Graphics Cards

- High Dynamic Range (HDR) Content Support

Overall, whether you choose FreeSync Premium Pro or G-SYNC Ultimate will depend on your specific needs and preferences when it comes to performance and features. Both technologies offer great options when it comes to gaming but depending on your budget, one may be more suitable than the other.

Ultimately, pairing an HDR monitor with either technology will give your gaming setup excellent visuals along with smooth, tear-free gameplay.

Frequently Asked Questions

1. What Is the Difference Between HDR and SDR?

HDR, or High Dynamic Range, and SDR, or Standard Dynamic Range, are two different kinds of display technology.

HDR is a newer technology that has been gaining popularity in recent years thanks to its ability to produce much higher contrast and a wider range of colors than SDR displays.

It’s also capable of displaying more detail in darker and brighter areas of an image than SDR can, making it particularly well-suited for viewing movies and other content with a wide dynamic range.

The downside is that HDR displays generally cost more than SDR displays.

2. Can I Use HDR on My Current Monitor?

Only if your current monitor accepts an HDR signal; a monitor without HDR support can’t display HDR content. Even then, it won’t be quite the same as using a dedicated HDR display.

While your monitor may be able to show an HDR signal, it may not have the brightness and contrast necessary to make the most of the technology, as is often the case with entry-level models.

That said, if your monitor supports HDR and you’re looking for a quick way to get a taste of what HDR has to offer, giving it a try on your existing setup isn’t a bad idea.

3. What Are the Benefits of HDR Compared to Regular Displays?

HDR stands for High Dynamic Range and is a type of display technology that helps create an enhanced viewing experience.

Compared to regular displays, HDR offers a wider range of colors and contrast, giving images more depth and detail.

HDR displays can also reach higher peak brightness, especially in highlights, which helps the picture hold up in bright rooms.

Additionally, HDR monitors typically cover a wider color gamut, so you can enjoy more vivid visuals with greater clarity.

4. What Kind of Graphics Card Do I Need to Take Advantage of HDR?

If you’re looking to take advantage of HDR, you’ll need a graphics card with an HDR-capable output: DisplayPort 1.4 or HDMI 2.0a or newer.

HDMI 2.0 can carry 4K HDR at refresh rates up to 60Hz, while DisplayPort 1.4 offers considerably more bandwidth for higher refresh rates.

Make sure your card supports 10-bit color output as well, since this will let you get the most out of HDR visuals.

Additionally, your monitor must support HDR, and HDR needs to be turned on in your operating system, for you to reap the benefits of this technology.
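To see why the connection matters, you can compare a video mode’s raw pixel data rate with what each link can carry. The Python sketch below counts active pixels only (real video timings add blanking on top) and uses approximate usable rates of 14.4 Gbit/s for HDMI 2.0 and 25.92 Gbit/s for DisplayPort 1.4 after 8b/10b encoding; the helper names are illustrative.

```python
# Rough sketch: uncompressed active-pixel data rate vs. approximate usable
# link bandwidth. Blanking intervals are ignored, so a mode that barely fits
# here may not fit in practice.

LINK_GBPS = {                  # usable rate after 8b/10b line encoding
    "HDMI 2.0": 14.4,          # 18 Gbit/s raw
    "DisplayPort 1.4": 25.92,  # 32.4 Gbit/s raw (HBR3, 4 lanes)
}

# Average samples per pixel for each chroma format.
SAMPLES_PER_PIXEL = {"RGB/4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def data_rate_gbps(width: int, height: int, refresh_hz: float,
                   bits_per_sample: int, chroma: str = "RGB/4:4:4") -> float:
    """Active-pixel data rate in Gbit/s."""
    samples = SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_sample * samples / 1e9


modes = [
    ("4K 60 Hz 10-bit RGB", 3840, 2160, 60, 10, "RGB/4:4:4"),
    ("4K 60 Hz 10-bit 4:2:2", 3840, 2160, 60, 10, "4:2:2"),
    ("4K 144 Hz 10-bit RGB", 3840, 2160, 144, 10, "RGB/4:4:4"),
]
for label, w, h, hz, bits, chroma in modes:
    rate = data_rate_gbps(w, h, hz, bits, chroma)
    fits = [name for name, cap in LINK_GBPS.items() if rate <= cap]
    print(f"{label}: {rate:.2f} Gbit/s, fits: {fits or 'neither uncompressed'}")
```

The 10-bit RGB 4K 60 Hz mode needs about 14.93 Gbit/s before blanking, already more than HDMI 2.0 can carry, which is why 4K 60 Hz HDR over HDMI 2.0 is normally sent with chroma subsampling such as 4:2:2. The 144 Hz mode exceeds even DisplayPort 1.4, so such monitors rely on Display Stream Compression (DSC) or chroma subsampling.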

5. Are There Any HDR-Specific Settings I Need to Adjust?

HDR-capable monitors allow for a wider range of color and brightness levels than traditional displays.

In fact, high-end HDR displays can exceed 1,000 nits of peak brightness, compared to the roughly 300 to 400 nits of a conventional display.

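To take advantage of this expanded range, first make sure HDR is turned on in your operating system (on Windows, through the HDR option in display settings), then fine-tune the monitor itself.

Useful settings include the black level, peak luminance, and the brightness response curve (HDR uses the PQ curve rather than a traditional gamma curve).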

Additionally, users may need to adjust their display’s color space settings and apply tone mapping to ensure they are getting the most out of their HDR monitor.
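Tone mapping is where the static versus dynamic metadata difference from the format comparison shows up. HDR10’s static metadata gives the display one brightness peak for the whole video (its maximum content light level, MaxCLL), while Dolby Vision’s dynamic metadata can give a peak per scene. The Python sketch below uses a deliberately simple knee-and-compress curve, not the curve any particular monitor uses; `tone_map`, the 0.75 knee ratio, and all nit values are assumptions for illustration.

```python
# Simplified tone-mapping sketch: values below a "knee" pass through, and
# everything from the knee up to the source peak is squeezed linearly into
# the display's remaining headroom. Real displays use smoother curves.

def tone_map(nits: float, source_peak: float, display_peak: float,
             knee_ratio: float = 0.75) -> float:
    """Map a source luminance (nits) into the display's range."""
    if source_peak <= display_peak:
        return nits  # content already fits; nothing to compress
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits
    # Linearly map [knee, source_peak] onto [knee, display_peak].
    return knee + (nits - knee) * (display_peak - knee) / (source_peak - knee)


display_peak = 600.0  # assumed monitor peak brightness
title_peak = 4000.0   # static metadata: brightest pixel in the whole video
scene_peak = 900.0    # dynamic metadata: brightest pixel in this scene

for highlight in (700.0, 900.0):
    static = tone_map(highlight, title_peak, display_peak)
    dynamic = tone_map(highlight, scene_peak, display_peak)
    print(f"{highlight:.0f} nits -> static {static:.0f}, dynamic {dynamic:.0f}")
```

With these numbers, the 700- and 900-nit highlights land at about 461 and 469 nits using the title-wide peak, which is nearly indistinguishable, but at about 533 and 600 nits using the scene’s own peak, so dynamic metadata lets this scene use the display’s full range.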

Conclusion

In conclusion, HDR is a great way to take your monitor viewing experience to the next level.

If you have a compatible graphics card and monitor, it’s definitely worth giving HDR a try.

You’ll be able to enjoy better contrast, more vibrant colors, and deeper blacks, all of which can make your content look like it’s popping out of the screen!

Plus, you can even tweak settings like brightness and saturation for an even more immersive experience.

By utilizing HDR technology, you’ll be living in the future with crystal-clear visuals that will make you feel like you’re right there in the action!